Skeleton Based Action Recognition using Convolutional Neural Network

I worked on skeleton-based action recognition using Kinect V2 to examine the differences between large benchmark datasets such as PKU-MMD and SBU-Kinect. I first collected data from the Kinect V2 using Matlab and then used a convolutional neural network (CNN) model for prediction. In this post, I will walk you through all the steps I followed and the methods I used for this piece of work.

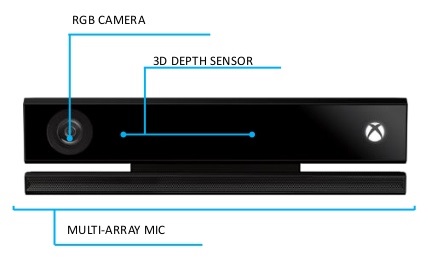

1) Setting up Kinect sensor and checking that it is working properly

I assume that you can connect the Kinect device to a power source and to your PC with a USB cable. Next, download Microsoft Kinect SDK 2.0 and install it.

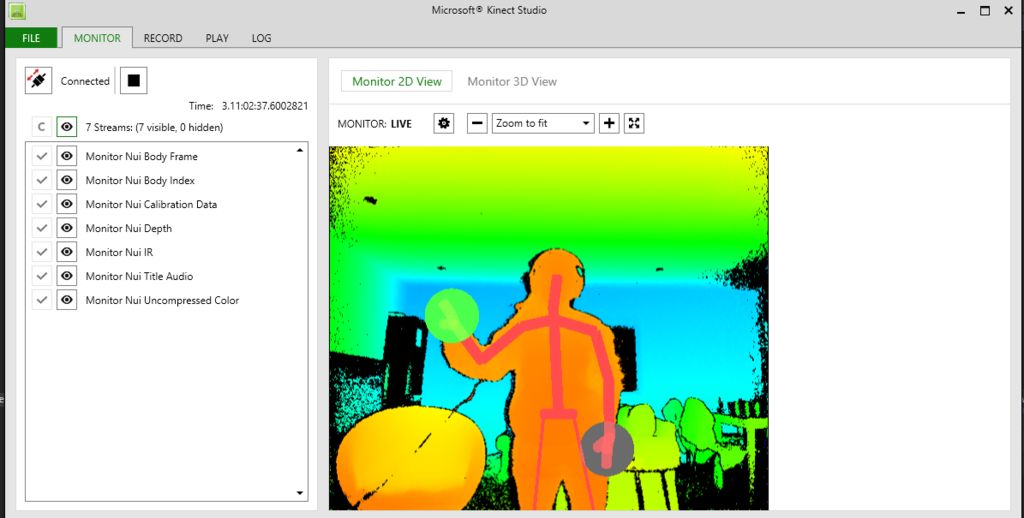

Once the Kinect SDK is installed, search for "Kinect Studio v2.0" in your search bar and open it. In the far-left corner there is an icon that displays "connect to server" when you hover over it. Click it, and you should see the output of the Kinect cameras on the right-hand side.

2) Connecting Kinect to Matlab

There are a number of steps I followed to get data from the Kinect into Matlab.

i) First, install the "Image Acquisition Toolbox", which you can do from the Add-Ons option in the Home tab of Matlab. You can install it as a trial version if you don't want a subscription.

ii) Next, install the "Image Acquisition Toolbox Support Package for Kinect for Windows Sensor", which can also be installed through Add-Ons in Matlab.

iii) Now run the "imaqhwinfo" command at the Matlab command prompt. If everything is installed properly, Kinect will be listed among the installed adaptors. Once you see this, you are all set to get data from the Kinect in Matlab.

2) Getting Data into Matlab

A good resource to follow is the Matlab example "Skeleton Viewer for Kinect V2 Skeletal Data". It shows what sort of data you can get from the Kinect, how it is stored, and how to draw the skeleton on top of the RGB image.

After running the code from that example, open the metadata variable in Matlab; it contains multiple fields. The "IsBodyTracked" field tells you whether any body has been tracked. The Kinect can track up to six people at a time: values like [0,0,0,0,0,0] mean that no one is tracked, while values like [0,0,0,1,0,1] mean that two bodies are tracked. The position of the 1's doesn't matter here.
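Reading the flag vector can be sketched in a few lines of Python. This is only an illustration: the function name `tracked_body_indices` is made up for this post and is not part of any Kinect or Matlab API. Note that Python counts slots from 0, whereas Matlab counts from 1.

```python
def tracked_body_indices(is_body_tracked):
    """Return the 0-based slots whose tracking flag is non-zero."""
    return [slot for slot, flag in enumerate(is_body_tracked) if flag != 0]


# No one in front of the sensor: every flag is 0.
print(tracked_body_indices([0, 0, 0, 0, 0, 0]))

# Two people tracked; which slots they occupy is arbitrary.
print(tracked_body_indices([0, 0, 0, 1, 0, 1]))
```

The length of the returned list is the number of tracked people, which is what matters when you only want frames that contain exactly two bodies.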

Next, the "JointPositions" field gives the x, y, z coordinates, in meters, of each body joint (Kinect V2 can recognize 25 body joints).

3) Storing Data

Our goal for this project was to detect and recognize interactions between two people. First I follow the same procedure as above to connect the Kinect and collect 200 frames from each of the color and depth video objects.

Then I save metadata and colorImg in order to draw the skeleton representation on colorImg (RGB data) using metadata (depth data), as shown in the figure below.

Below is the code snippet to get and save the data. I prefer a dedicated folder for this data to keep storage clean; for example, I use a kinect_data folder.

```matlab
data = metadata;            % copy of the per-frame metadata
m = 1;                      % output row; advances only when a frame is kept
clear data_joints_values    % start from an empty result matrix

for n = 1:framesPerTrig     % loop over the captured frames (e.g., 200)
    currentframeMetadata = data(n);

    % indices of the bodies tracked in this frame
    trackedBodies = find(currentframeMetadata.IsBodyTracked);

    % keep the frame only if two people are tracked, since both are indexed below
    if numel(trackedBodies) >= 2
        data_color_joints = currentframeMetadata.JointPositions;  % joints x (x,y,z) x bodies

        for j = 1:25
            c = 3*(j-1);    % column offset of joint j within one person's block
            % first person: columns 1..75
            data_joints_values(m, c+1:c+3) = data_color_joints(j, 1:3, trackedBodies(1));
            % second person: columns 76..150
            data_joints_values(m, 75+c+1:75+c+3) = data_color_joints(j, 1:3, trackedBodies(2));
        end

        m = m + 1;
    end
end

data_save = data_joints_values;   % matrix to save, one row per kept frame

% save to file with values separated by spaces
dlmwrite('kinect_data\data.txt', data_save, ' ')
```
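On the Python side, the saved file can be read back and split into per-person joints. The sketch below assumes the layout produced by the snippet above: one frame per line, 150 space-separated values, with the first 75 belonging to the first person and the last 75 to the second. The helper names `split_row` and `load_frames` are illustrative and do not come from the project code.

```python
import os
import tempfile

NUM_JOINTS = 25   # Kinect V2 joints per body
COORDS = 3        # x, y, z


def split_row(values, num_joints=NUM_JOINTS):
    """Split one flat frame row into two lists of (x, y, z) joints."""
    per_person = num_joints * COORDS
    if len(values) != 2 * per_person:
        raise ValueError(f"expected {2 * per_person} values, got {len(values)}")
    people = []
    for p in range(2):
        block = values[p * per_person:(p + 1) * per_person]
        joints = [tuple(block[j * COORDS:(j + 1) * COORDS]) for j in range(num_joints)]
        people.append(joints)
    return people


def load_frames(path, num_joints=NUM_JOINTS):
    """Read a space-delimited file; return one [person1, person2] entry per frame."""
    frames = []
    with open(path) as fh:
        for line in fh:
            if line.strip():  # skip empty lines
                row = [float(v) for v in line.split()]
                frames.append(split_row(row, num_joints))
    return frames


# Self-check on a synthetic one-frame file holding the values 0..149.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "data.txt")
    with open(demo_path, "w") as fh:
        fh.write(" ".join(str(v) for v in range(150)) + "\n")
    demo_frames = load_frames(demo_path)

print(len(demo_frames), demo_frames[0][0][0], demo_frames[0][1][0])
```

Passing `num_joints` as a parameter keeps the same helper usable for skeletons with a different joint count, as long as the file follows the same person-by-person layout.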

Training and Testing CNN Model

I followed the paper "Co-occurrence Feature Learning from Skeleton Data for Action Recognition and Detection with Hierarchical Aggregation" and used its source code from GitHub to train on the UMassd-Kinect dataset.

However, that code is trained on the SBU-Kinect dataset, which has 15 body joints, while UMassd-Kinect has 25, so I updated the model to train on 25 body joints. I also updated the code so that it can get data from Matlab at run time and recognize the action in real time.

The purpose of real-time recognition is to present a study that can differentiate between synthetic data (recorded in a laboratory or other controlled environment) and non-synthetic data (captured in real time without controlling lighting, illumination, background, and similar settings). The model seems to give good results on a relatively small dataset.

Code

The full code, containing the Matlab and Python files, is uploaded on GitHub.

- Live_Skeleton_Action_Recognition.ipynb

- Co-occurrence Feature Learning for Action Recognition for Multi-View Dataset containing 25 (3D) Body Joints.ipynb

After setting up the Kinect sensor and connecting it to Matlab as described in steps (1) and (2) above, you can use the Live_Skeleton_Action_Recognition notebook for a live demo. It contains the model pre-trained on the UMassd-Kinect dataset (my_model.h5) and calls the Matlab function "live_demo.m", which starts the Kinect sensor, captures the data at run time, and sends it back to the Jupyter notebook. The model then gives the prediction results.

However, if you want to do the training yourself, you can use the Co-occurrence Feature Learning notebook to train the model from scratch. It uses the data in the "data" folder, which contains five classes (hand shake, point finger, giving copy, hug, and tap back) performed by five different subjects and recorded from three different angles with varying backgrounds. The dataset comes with a train and test split. You can also use any other dataset for training and testing the model.
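Whichever notebook you use, the model's output for a clip is one score per class, which has to be mapped back to an action name. Here is a minimal sketch of that last step. The order of `CLASS_NAMES` is an assumption made for illustration; the real order depends on how the labels were encoded during training, and `decode_prediction` is a hypothetical helper, not a function from the repository.

```python
# Assumed label order, for illustration only.
CLASS_NAMES = ["hand shake", "point finger", "giving copy", "hug", "tap back"]


def decode_prediction(scores, class_names=CLASS_NAMES):
    """Return (label, score) for the highest-scoring class."""
    if len(scores) != len(class_names):
        raise ValueError("one score per class is required")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return class_names[best], scores[best]


# Example with made-up scores for a single clip.
print(decode_prediction([0.05, 0.10, 0.70, 0.10, 0.05]))
```

If you train on another dataset, only the list of class names needs to change.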
