Finding Achilles' Heel: Adversarial Attack on Multi-modal Action Recognition

Abstract

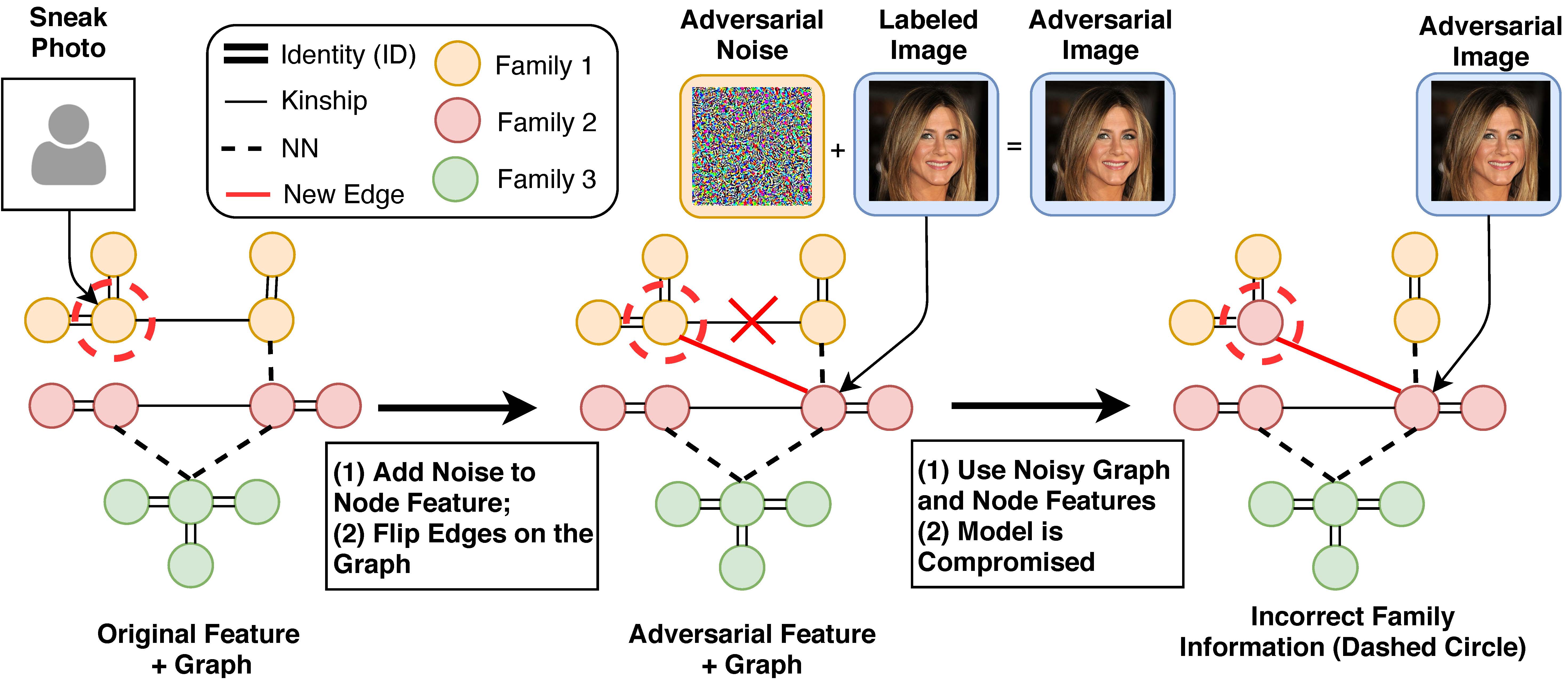

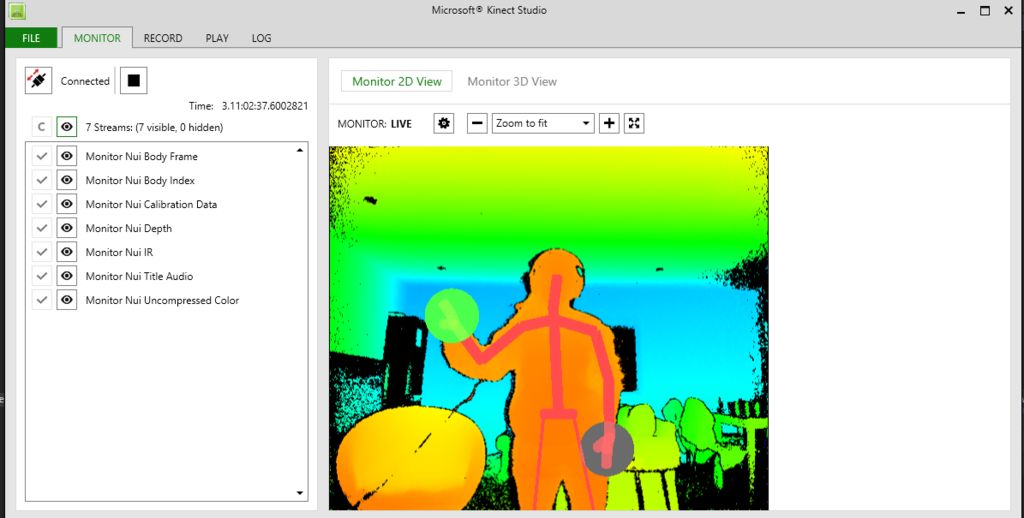

Neural network-based models are notorious for their adversarial vulnerability. Recent adversarial machine learning research has mainly focused on images, where a small perturbation can simply be added to fool the learning model. Very recently, this practice has been extended to attacks on human action videos by adding perturbations to key frames. Unfortunately, frame selection is usually computationally expensive at run-time, and adding noise to all frames is unrealistic as well. In this paper, we present a novel yet efficient approach to address this issue. Multi-modal video data such as RGB, depth, and skeleton data have been widely used for human action modeling and have been shown to outperform a single modality. Interestingly, we observe that skeleton data is more "vulnerable" under adversarial attack, and we propose to leverage this "Achilles' Heel" to attack multi-modal video data. In particular, first, an adversarial learning paradigm is designed to perturb skeleton data for a specific action under a black-box setting, which highlights how body joints and key segments in videos are subject to attack. Second, we propose a graph attention model to explore the semantics between segments from different modalities and within a modality. Third, the attack is launched at run-time on all modalities through the learned semantics. The proposed method has been extensively evaluated on multi-modal visual action datasets, including PKU-MMD and NTU-RGB+D, to validate its effectiveness. [Paper]
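To make the idea of a black-box skeleton perturbation concrete, the following is a minimal illustrative sketch, not the method proposed in the paper. It assumes a toy linear classifier as a stand-in for the target model, a skeleton clip stored as a `(frames, joints, 3)` array, and a simple query-based random search under an L-infinity budget `eps`. All names (`make_toy_classifier`, `random_search_attack`, `eps`, `num_queries`) are hypothetical and chosen for illustration only.

```python
import numpy as np


def make_toy_classifier(num_frames, num_joints, num_classes, seed=0):
    """Return a score function for a random linear model (a stand-in, not a real recognizer)."""
    rng = np.random.default_rng(seed)
    weights = rng.normal(size=(num_classes, num_frames * num_joints * 3))

    def predict_scores(skeleton):
        # The attacker only ever sees these output scores (black-box access).
        return weights @ skeleton.reshape(-1)

    return predict_scores


def margin(scores, label):
    """True-class score minus the best other score; negative means misclassified."""
    return scores[label] - np.delete(scores, label).max()


def random_search_attack(predict_scores, skeleton, label, eps=0.05,
                         num_queries=500, seed=0):
    """Greedy random search: perturb one joint in one frame per query, keep improvements."""
    rng = np.random.default_rng(seed)
    num_frames, num_joints, _ = skeleton.shape
    delta = np.zeros_like(skeleton)
    best = margin(predict_scores(skeleton), label)
    for _ in range(num_queries):
        if best < 0:  # the model is already fooled
            break
        t = rng.integers(num_frames)
        j = rng.integers(num_joints)
        candidate = delta.copy()
        # Each coordinate of the chosen joint moves by exactly +eps or -eps.
        candidate[t, j] = eps * rng.choice([-1.0, 1.0], size=3)
        m = margin(predict_scores(skeleton + candidate), label)
        if m < best:
            best, delta = m, candidate
    return skeleton + delta, delta, best


# Toy usage: 20 frames, 25 joints, 5 action classes (all sizes are assumptions).
predict_scores = make_toy_classifier(num_frames=20, num_joints=25, num_classes=5)
skeleton = np.random.default_rng(1).normal(size=(20, 25, 3))
label = int(np.argmax(predict_scores(skeleton)))
clean_margin = margin(predict_scores(skeleton), label)
adv_skeleton, delta, adv_margin = random_search_attack(predict_scores, skeleton, label)

# Per-joint and per-frame perturbation mass hints at which joints and frames were attacked.
joint_saliency = np.abs(delta).sum(axis=(0, 2))
frame_saliency = np.abs(delta).sum(axis=(1, 2))
print("margin before:", clean_margin, "after:", adv_margin)
```

In this sketch, `joint_saliency` and `frame_saliency` only summarize where the random search placed its perturbation; the paper's learned attack and its graph attention model for transferring the attack across modalities are not reproduced here.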